Research Computing

Purdue IT, via the Rosen Center for Advanced Computing (RCAC), provides Purdue and the nation with world-class scientific computing facilities and expertise to impact the world, enable discovery, and train future scientists. RCAC’s goal is to be a one-stop provider of choice, delivering high-performance computing at the highest proven value.

We do this with three lines of service:

- High-performance computing, networks, and data services through our cost-effective community cluster program and, nationally, through Anvil and NSF ACCESS/NAIRR

- Research software engineering, with strengths in science gateways, AI, scientific data management, and augmented/extended reality (XR)

- Advanced domain expertise to support the application of computational methods by Purdue researchers

Contact: rcac-help@purdue.edu

Principles

Manage Research Risk

- Purdue will follow recognized best practices:

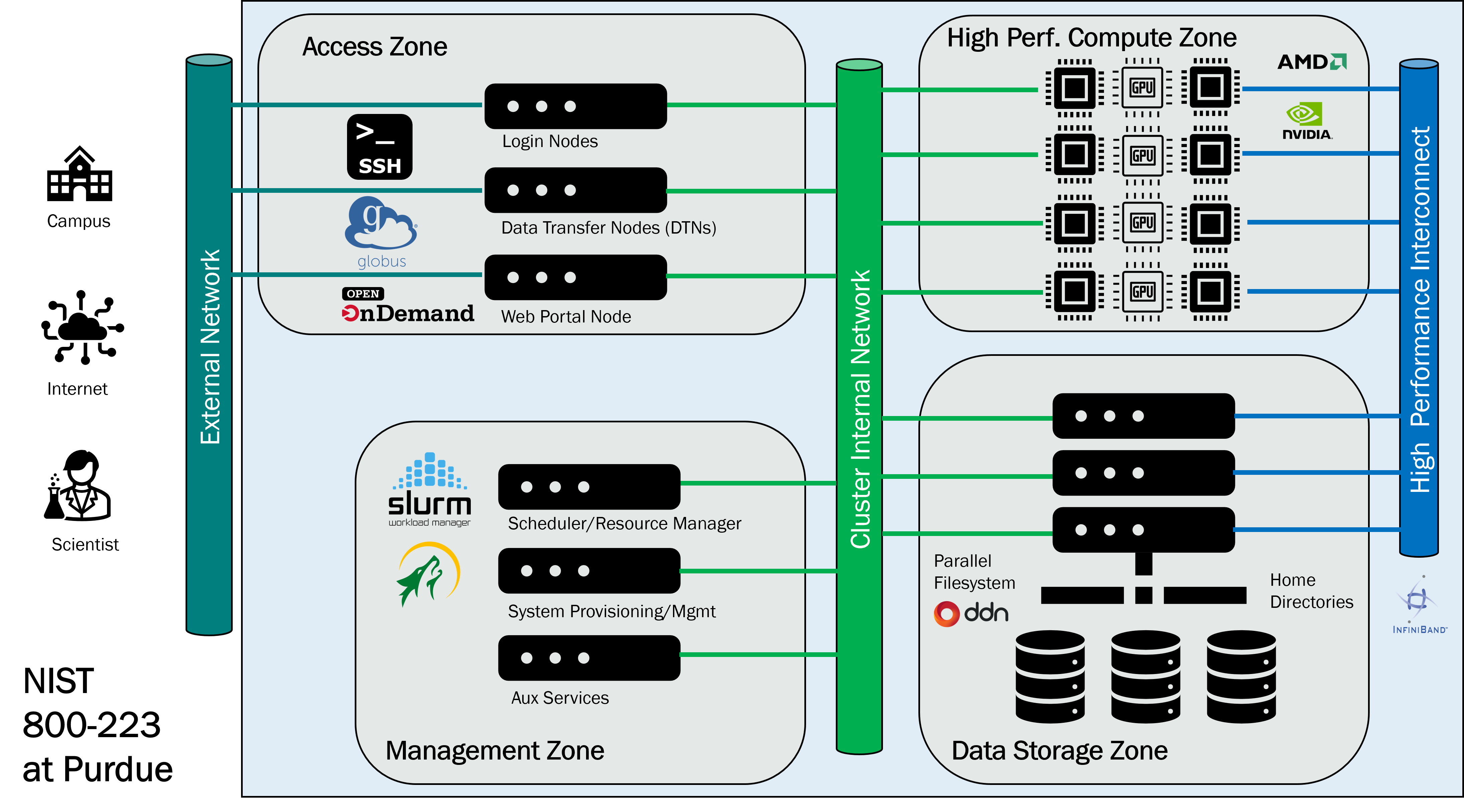

- NIST SP 800-223 (High-Performance Computing (HPC) Security: Architecture, Threat Analysis, and Security Posture) and NIST SP 800-234 (High-Performance Computing (HPC) Security Overlay)

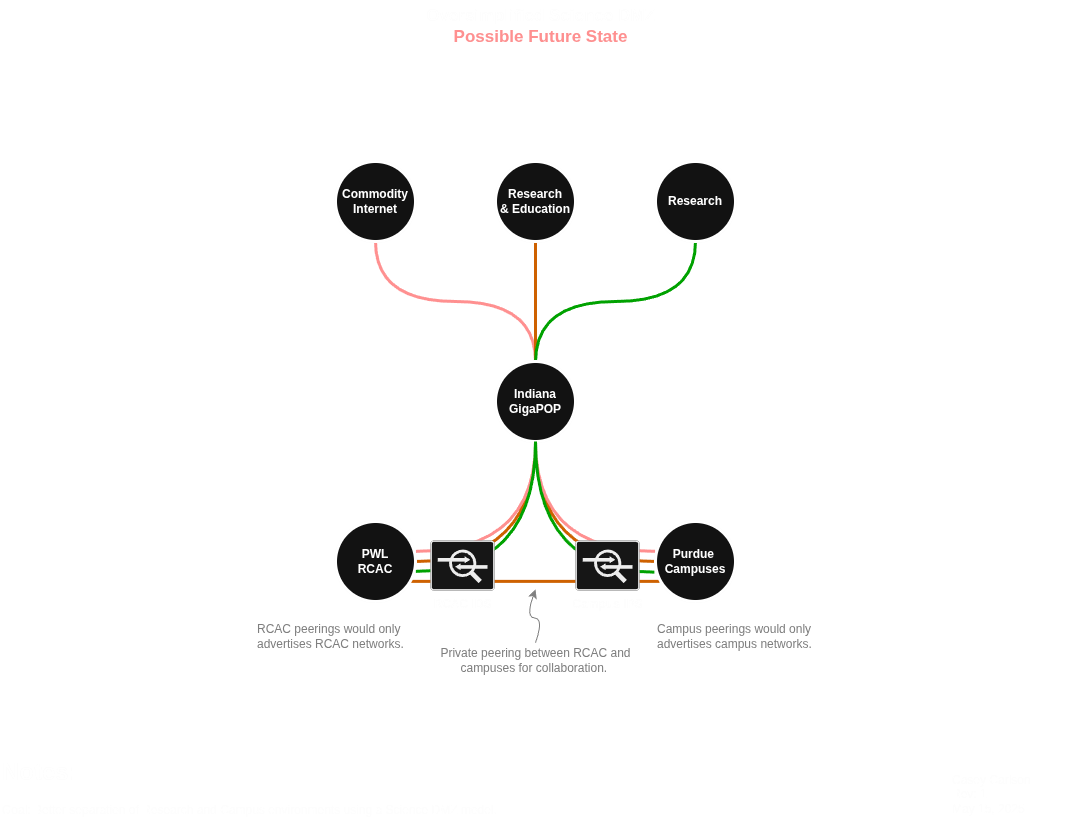

- Network security controls will be implemented in line with Science DMZ architecture best practices

- Research networks, data, and computation will be handled within designated research-focused resources, distinct from general-purpose offerings

- Sensitive/restricted data is handled by a limited number of specific resources (e.g., Weber/Rossmann) within the research ecosystem

Distinct Resource Boundaries

- A system’s management zone is not exposed outside of the single resource boundary

- A system’s data storage zone is not exposed outside of the single resource boundary, except through data transfer node (DTN) interfaces

- A system’s high-performance interconnect is not exposed or bridged outside of the single resource boundary

- With the exception of the Data Depot, POSIX filesystems do not span multiple resources

- Global data resources exist but are distinct from clusters (global resources include Fortress and the Data Depot)

- Access to global data resources or third-party systems is via API interfaces (HSI, Globus, S3, HTTP, XRootD), not POSIX mounts
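The boundary rules above can be read as a small decision procedure. The following is a minimal sketch under stated assumptions, not RCAC's actual enforcement mechanism: the `Access` record, the zone and method strings, the `is_allowed` function, and the cluster names used in the usage note are all hypothetical and chosen only for illustration. Third-party systems are not modeled.

```python
from dataclasses import dataclass

# Hypothetical labels for the API-style access methods named in the list above.
API_METHODS = {"hsi", "globus", "s3", "http", "xrootd"}
# Global data resources named in the list above.
GLOBAL_RESOURCES = {"fortress", "depot"}


@dataclass(frozen=True)
class Access:
    source: str  # resource the request originates from
    target: str  # resource being reached
    zone: str    # "management", "storage", or "interconnect"
    method: str  # e.g. "posix", "dtn", "globus", "s3"


def is_allowed(a: Access) -> bool:
    """Return True if the access respects the boundary rules sketched here."""
    if a.source == a.target:
        return True  # traffic stays inside a single resource boundary
    if a.target == "depot" and a.zone == "storage" and a.method == "posix":
        return True  # the Data Depot is the stated POSIX exception
    if a.zone in ("management", "interconnect"):
        return False  # never exposed or bridged across boundaries
    if a.zone == "storage":
        if a.target in GLOBAL_RESOURCES:
            return a.method in API_METHODS  # global data via APIs only
        return a.method == "dtn"  # cluster storage only via DTN interfaces
    return False  # unknown zones are denied by default
```

For example, `is_allowed(Access("cluster_a", "cluster_b", "storage", "posix"))` evaluates to `False`, while the same request with method `"dtn"` evaluates to `True`. Denying unknown zones by default is a design choice of this sketch, not something the principles state.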

Cohesive System Design

- An HPC system is planned as a balanced, coherent whole, rather than a collection of parts.

- A system will not mix incompatible technologies (OEMs, interconnects, accelerators, CPU instruction sets, etc.)

- A system will not introduce complexity through duplicated features

- The balance of network, storage, FLOPs, size, power, and cooling will be deliberately planned to support the anticipated application scale and mix
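As a rough illustration of the "no mixed technologies" principle, a planned system's node inventory could be scanned for attributes that take more than one value. This is a sketch only: the function name `mixed_technologies` and the attribute keys in the usage note are hypothetical, and "more than one value" is a simplifying stand-in for "incompatible", which in practice requires engineering judgment.

```python
from collections import defaultdict


def mixed_technologies(nodes):
    """Return the attributes that take more than one value across nodes.

    `nodes` is a list of dicts mapping an attribute name to its value.
    The result maps each mixed attribute to the set of values seen.
    """
    seen = defaultdict(set)
    for node in nodes:
        for attr, value in node.items():
            seen[attr].add(value)
    # Keep only attributes that are not uniform across the system.
    return {attr: values for attr, values in seen.items() if len(values) > 1}
```

Given two nodes that share an interconnect but differ in CPU instruction set, for instance `{"cpu_isa": "x86_64", "interconnect": "infiniband"}` and `{"cpu_isa": "aarch64", "interconnect": "infiniband"}`, the function reports only `cpu_isa` as mixed.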

Researcher-Developed Solutions

- Clusters, clouds, and data services are provided “as a service” for running and developing scientific applications and gateways.

- Solutions developed through a funded research software engineering (RSE) project will reside in the managed trust boundary, or with an external provider if appropriate

- Datacenter colocation, bare-metal server hosting, and self-managed systems will reside outside the managed trust boundary and will be supported through other IT service offerings and networks.

Diagrams